Because platforms such as Facebook or Twitter do little content monitoring, they are a highly useful means of spreading disinformation. Under the cover of freedom of expression, unscrupulous actors spread false information according to three principles: speed, continuity and inconsistency. An effective way to spread information quickly is to control, manually or by computer, multiple fake accounts and profiles on social media. Such accounts are called bots. They number in the millions on social networks and are the main producers of large-scale misinformation.

To be effective, misinformation must spread quickly, before truthful and verifiable information is put online. Truth is not a determining factor, even though most propaganda stories contain some truth. In fact, Carnegie Mellon University sociologist Kathleen Carley argues that false news travels six times faster on social media than real news. Meanwhile, Christopher Paul and Miriam Matthews show that multiple sources are more convincing than a single source, and that the frequency with which one receives information is critical. In short, numbers matter.

On social media, Russian-run accounts must be proactive: they relay the same information from several other accounts, personal or official, and back it up with videos and images presented as evidence. A post that is shared, retweeted, liked or commented on by a large number of other accounts seems more reliable and truthful than one with little interaction. Bots spread information faster and more efficiently than manual posting can. The fake accounts like, share, comment on and retweet one another's posts, creating the illusion that the information is supported by thousands or millions of people and making it seem more “reliable” in the eyes of real readers.

In this regard, disinformation does not commit to defending a single story at a time. In practice, several pieces of misleading information are shared simultaneously by several different accounts. If people do not buy into a false story, the bots stop sharing it. However, if community members start sharing and believing a false story, the bots relay it as often as possible in a short period of time to multiply its reach across social networks.

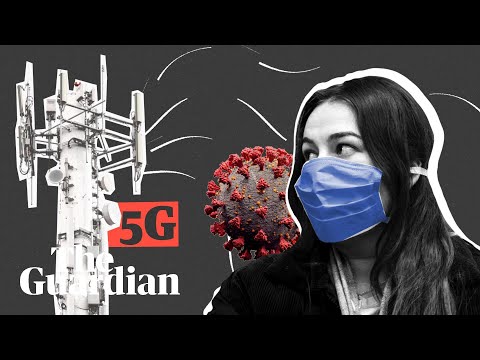

Disinformation and Covid-19

In the midst of the pandemic, sociologist Kathleen Carley points out that bots are twice as active as studies had initially predicted. According to Stephanie Carvin of Carleton University, a former national security analyst for the Canadian Security Intelligence Service, Russian bots are currently promoting two particularly dangerous theories:

- that Covid-19 was created in a laboratory as a biological weapon;

- that the pandemic is being used to cover up the harmful effects of new 5G towers, or that the towers themselves are spreading the virus.

These theories, put forward by Russian bots, appeared in early January, and Chinese and Iranian bots joined in by mid-March. Since then, the bots’ posts have far outnumbered those of human-controlled accounts.

According to Kathleen Carley, bots are currently responsible for about 70% of Covid-19-related activity on Twitter, and 45% of the accounts relaying information about the virus are bots. The audiences most receptive to these theories are currently anti-vaccine activists, conspiracy theorists and technophobes.

The laboratory-origin theory varies depending on which bots share it. The story is the same, but the country of origin changes depending on the audience: some versions claim that the virus was developed in a Chinese laboratory in Wuhan, others that it was developed in a US military laboratory before being transported to and released in Wuhan.

A recent poll conducted by Professor Sarah Evert of Carleton University in Ottawa reveals that this theory is supported by approximately 26% of Canadians. Using the Datametrex tool, Carleton University researchers were able to study more than five million social media posts that were then linked to Russian or Chinese bots.

Conspiracy theories about Covid-19 are particularly abundant because the scientific community’s knowledge of the virus remains limited. These theories can therefore create the illusion of filling the void left by science, or of explaining what scientists cannot. Kathleen Carley also points out that these theories are particularly difficult to debunk because they are shared by a large number of people, including politicians and celebrities who have a much wider audience than scientists.

Regarding the 5G theory, the Carleton University poll reveals that about 11% of Canadians believe that Covid-19 is not a real or serious virus, and that the pandemic is being used to cover up the harmful effects of 5G towers on humans. Conspiracy theories link the outbreak of the virus in China and its spread to the development of 5G, a field in which China is a technology pioneer. As a result, numerous acts of vandalism have targeted telecommunications installations, 5G or not, all over Europe.

According to Sarah Evert of Carleton University, young people between the ages of 18 and 29 are more susceptible to conspiracy theories. The strongest believers are also much more active on social media than people who do not subscribe to such theories. They share far more conspiracy content than most users and are much more likely to discredit scientific advances or accurate information, for example by commenting extensively on fake news. Forty-nine per cent of Canadians who believe that Covid-19 was created in a laboratory, and 58% of those who believe the 5G theories, said they could easily distinguish false information and conspiracy theories from real information.

Kathleen Carley offers some advice to help identify misinformation. First, information is not necessarily true just because it is relayed by several thousand people or comes from several sources. More than 80% of misinformation comes from individuals rather than traditional media outlets, so the usual channels are generally reliable. Moreover, if a solution (a cure, in the case of Covid-19) seems too good to be true, it probably is. For Covid-19, the most reliable sources remain scientists, such as those affiliated with the World Health Organization or the Centers for Disease Control and Prevention. Social media are rife with misinformation, which bots and fake accounts spread widely through images and YouTube videos.

Rise of Russian disinformation

Why does misinformation exist, and why are the Russians using it so profusely? Russian foreign policy is geared toward its own interests, and only its own interests. The use of disinformation is therefore aimed at serving those interests. If Russia is pushing theories against 5G, it is perhaps to slow down its deployment and harm the economic development of certain countries. In its international relations, Russia has no “friends”, only allies, and only as long as they serve Moscow’s interests. Disinformation is therefore part of this line of thinking, serving Russian interests at the expense of other nations.

Sophie Marineau, PhD candidate in the history of international relations, Université catholique de Louvain.

This article first appeared on The Conversation.
